Lesson 13: Geographic Distribution Strategy

Building Global Twitter Architecture That Actually Works

What We're Building Today

Today we transform our single-region Twitter MVP into a globally distributed system spanning three continents. You'll implement intelligent traffic routing, tackle real-world latency challenges, and understand why Instagram loads instantly in Tokyo but your app might not.

Key Deliverables:

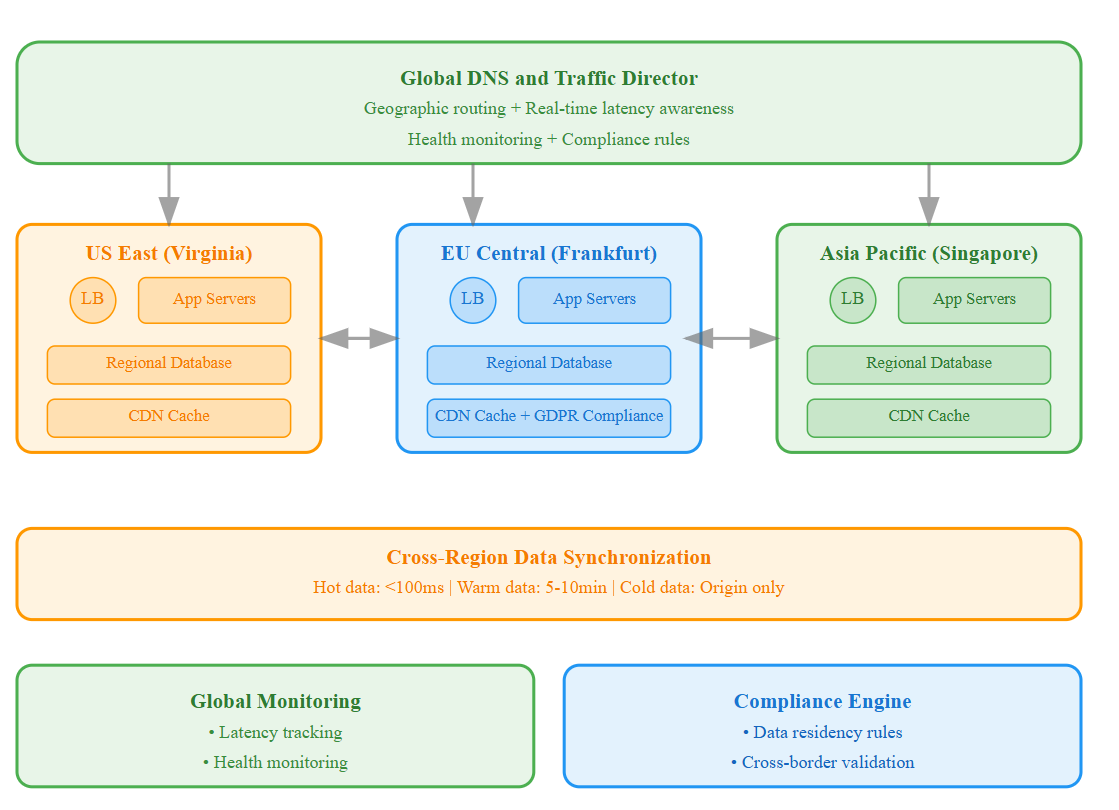

Multi-region deployment across US, Europe, and Asia-Pacific

Intelligent traffic routing based on user location

CDN integration for static assets and timeline caching

Compliance-aware data placement for GDPR and regional laws

Real-time latency monitoring and automatic failover

Core Concepts: The Physics of Global Communication

The Speed of Light Problem

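Light travels through fiber optic cable at roughly 200,000 km/second, about two-thirds of its speed in a vacuum. From New York to Tokyo (about 10,800 km in a straight line), that's a minimum of 54ms each way, or 108ms per round trip, before any routing, queuing, or processing delays. Real cable routes are longer than the straight-line distance, so actual latency is higher. This physical reality shapes every decision in global system design.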
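To make the arithmetic concrete, here is a minimal sketch of the lower-bound calculation. The function names are made up for this lesson, and the 200,000 km/second figure is the approximation used above, not a measured value.

```python
# Approximate speed of light in fiber, as used in the lesson text.
FIBER_SPEED_KM_PER_S = 200_000


def min_one_way_ms(distance_km: float) -> float:
    """Lower bound on one-way propagation delay, in milliseconds."""
    return distance_km / FIBER_SPEED_KM_PER_S * 1000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip propagation delay, in milliseconds."""
    return 2 * min_one_way_ms(distance_km)


if __name__ == "__main__":
    ny_tokyo_km = 10_800  # straight-line estimate from the text
    print(f"one way:    {min_one_way_ms(ny_tokyo_km):.0f} ms")
    print(f"round trip: {min_round_trip_ms(ny_tokyo_km):.0f} ms")
```

No amount of server tuning can get under these numbers. The only way to beat them is to move the data closer to the user.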

Geographic Distribution Patterns

Edge Locations: Serve static content (images, videos, CSS) from locations closest to users

Regional Data Centers: Host application logic and regional user data

Global Coordination: Synchronize critical data across regions while respecting data sovereignty
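One simple way to decide which regional data center serves a user is to pick the geographically closest one. The sketch below assumes three hypothetical region names and approximate coordinates (Northern Virginia, Dublin, Tokyo) chosen for illustration. Distance is only a proxy for latency, so a production setup would more likely rely on GeoDNS or measured latency.

```python
import math

# Hypothetical regions with approximate (latitude, longitude) coordinates.
REGIONS = {
    "us-east": (38.9, -77.0),        # Northern Virginia (assumed)
    "eu-west": (53.3, -6.3),         # Dublin (assumed)
    "ap-northeast": (35.7, 139.7),   # Tokyo (assumed)
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearest_region(user_lat: float, user_lon: float) -> str:
    """Return the name of the region closest to the user's location."""
    return min(
        REGIONS,
        key=lambda name: haversine_km(user_lat, user_lon, *REGIONS[name]),
    )


if __name__ == "__main__":
    print(nearest_region(48.86, 2.35))    # a user in Paris
    print(nearest_region(35.68, 139.69))  # a user in Tokyo
```

This covers only the "closest location" part of the patterns above. Data sovereignty rules can override it, for example by pinning a user's stored data to a specific region no matter where the request comes from.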

Latency Impact on User Experience

A widely cited Amazon finding is that every 100ms of added latency cost roughly 1% in sales. For social media, where engagement drives everything, sub-200ms response times across all regions become critical for user retention.

Context in Distributed Systems: Twitter's Global Challenge

Real-World Scale Reference

Twitter has been reported to serve 450+ million monthly active users across 190+ countries. A single tweet from a celebrity can generate millions of interactions within minutes, requiring coordination across dozens of data centers.

Component Integration

Our geographic distribution layer sits between the load balancer (previous lesson) and application servers, making intelligent routing decisions before requests reach our business logic.
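As a rough illustration of the routing decision this layer makes, the sketch below picks the lowest-latency healthy region and fails over when the preferred one is down. The `Region` class, its fields, and `pick_region` are hypothetical names for this example. The health flags and latency numbers are assumed to come from a monitoring component that is not shown here.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Region:
    name: str
    healthy: bool        # assumed to be set by an external health checker
    latency_ms: float    # assumed measured latency from the user's vantage point


def pick_region(regions: Iterable[Region]) -> Region:
    """Choose the healthy region with the lowest measured latency.

    Unhealthy regions are skipped, so traffic fails over automatically
    to the next-best region.
    """
    healthy = [r for r in regions if r.healthy]
    if not healthy:
        raise RuntimeError("no healthy region available")
    return min(healthy, key=lambda r: r.latency_ms)


if __name__ == "__main__":
    regions = [
        Region("us-east", healthy=True, latency_ms=120.0),
        Region("eu-west", healthy=False, latency_ms=30.0),   # closest, but down
        Region("ap-northeast", healthy=True, latency_ms=250.0),
    ]
    print(pick_region(regions).name)  # eu-west is skipped because it is unhealthy
```

Keeping this decision in front of the application servers means business logic never needs to know which region it is running in.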

Week 2 Scale Target

We're targeting 10,000 concurrent users distributed globally, with 90% experiencing sub-200ms response times regardless of their geographic location.
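One way to check this target is to compute the 90th-percentile response time per region and compare it to the 200ms budget. The sketch below uses the nearest-rank percentile method. The function names and sample data are invented for illustration, and checking every region separately is a stricter reading of "regardless of geographic location" than a single global percentile.

```python
import math
from typing import Dict, Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_target(
    samples_by_region: Dict[str, Sequence[float]],
    pct: float = 90,
    budget_ms: float = 200,
) -> Dict[str, bool]:
    """For each region, report whether its pct-th percentile latency is within budget."""
    return {
        region: percentile(samples, pct) <= budget_ms
        for region, samples in samples_by_region.items()
    }


if __name__ == "__main__":
    # Made-up response times in milliseconds.
    observed = {
        "us-east": [100] * 9 + [500],       # one slow outlier in ten requests
        "ap-northeast": [100] * 8 + [500] * 2,  # two slow requests in ten
    }
    print(meets_target(observed))
```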