Lesson 28: Building a Twitter-Scale Content Moderation System

What We’re Building Today

You’re about to build the guardian of your Twitter clone: a content moderation system that processes half a million posts every hour while keeping your platform safe. Think of it as a digital bouncer that never sleeps, never gets tired, and gets better as human reviewers correct its mistakes.

Today’s Agenda:

Multi-layer automated content filtering with ML models

Human review workflows for edge cases

Appeal system for contested decisions

Real-time processing pipeline handling 500K posts/hour

Why This Matters in Production Systems

Every major social platform faces the same challenge: how do you moderate billions of posts without degrading the user experience? Twitter has reported volumes of around 500 million tweets per day, and moderating at that scale takes a pipeline that combines automated detection, human review, and an appeals process.

Your system will mirror this architecture at a smaller scale (500K posts/hour is about 12 million posts per day), teaching you patterns used by platforms serving billions of users.

Core Concepts: The Multi-Layer Defense Strategy

The Pipeline Architecture

Content moderation isn’t a single filter; it’s a pipeline in which each layer catches a different type of harmful content:

Layer 1: Pre-publication Scanning - Blocks obvious violations before they reach users

Layer 2: Real-time Monitoring - Continuously scans published content

Layer 3: Community Reporting - Leverages user reports for contextual violations

Layer 4: Appeal Processing - Handles contested moderation decisions
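To make the ordering of the layers concrete, here is a minimal Python sketch of where each layer acts in a post's lifecycle. The blocklist, the report threshold of 3, and the status names are hypothetical placeholders; a real Layer 1 would call an ML model rather than match strings.

```python
from dataclasses import dataclass, field

# Hypothetical stand-ins: a real Layer 1 would call an ML model.
BLOCKED_TERMS = {"buy followers", "free crypto"}
REPORT_THRESHOLD = 3  # assumed number of distinct reporters before escalation


@dataclass
class Post:
    post_id: int
    text: str
    status: str = "pending"
    reporters: set = field(default_factory=set)


def prepublication_scan(post: Post) -> Post:
    """Layer 1: block obvious violations before the post becomes visible."""
    lowered = post.text.lower()
    blocked = any(term in lowered for term in BLOCKED_TERMS)
    post.status = "blocked" if blocked else "published"
    return post


def realtime_monitor(post: Post, flagged_ids: set) -> Post:
    """Layer 2: re-check published posts, e.g. when a newer model flags them."""
    if post.status == "published" and post.post_id in flagged_ids:
        post.status = "under_review"
    return post


def community_report(post: Post, reporter_id: int) -> Post:
    """Layer 3: escalate once enough distinct users have reported the post."""
    post.reporters.add(reporter_id)
    if post.status == "published" and len(post.reporters) >= REPORT_THRESHOLD:
        post.status = "under_review"
    return post


def appeal(post: Post, upheld: bool) -> Post:
    """Layer 4: a contested block is either upheld or reversed on review."""
    if post.status == "blocked" and not upheld:
        post.status = "published"
    return post


post = prepublication_scan(Post(1, "Free crypto for everyone!"))
print(post.status)  # blocked
post = appeal(post, upheld=False)
print(post.status)  # published
```

Each layer only changes a post's status, so layers can run at different times (at submission, on a schedule, on user action) without knowing about each other.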

The Detection Engine

Your ML models work like specialized detectors:

Text Classifier: Identifies hate speech, spam, and misinformation patterns

Image Analyzer: Detects inappropriate visual content using computer vision

Behavioral Detector: Spots coordinated inauthentic behavior and bot networks
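A minimal sketch of how the three detectors can share one interface and run concurrently. The keyword heuristic, the image stub, and the 30-posts-per-minute bot rule are hypothetical placeholders for trained models; threads are used on the assumption that each detector would be an I/O-bound call to a model server.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Post:
    text: str
    image_urls: list = field(default_factory=list)
    author_posts_last_minute: int = 0


# Each detector returns (label, score in [0, 1]). The heuristics below are
# hypothetical placeholders for trained models.
def text_classifier(post: Post) -> tuple:
    spam_markers = ("click here", "buy now", "limited offer")
    hits = sum(marker in post.text.lower() for marker in spam_markers)
    return "spam", min(1.0, hits / 2)


def image_analyzer(post: Post) -> tuple:
    # Stub returning a fixed placeholder score; a real analyzer would run
    # a vision model on each image.
    return "unsafe_image", (0.5 if post.image_urls else 0.0)


def behavioral_detector(post: Post) -> tuple:
    # Assumed rule: 30+ posts per minute from one author looks automated.
    return "bot_activity", min(1.0, post.author_posts_last_minute / 30)


DETECTORS = (text_classifier, image_analyzer, behavioral_detector)


def analyze(post: Post) -> dict:
    """Run all detectors concurrently and collect one score per label."""
    with ThreadPoolExecutor(max_workers=len(DETECTORS)) as pool:
        results = list(pool.map(lambda detect: detect(post), DETECTORS))
    return dict(results)


print(analyze(Post("Click here to buy now!", author_posts_last_minute=45)))
# {'spam': 1.0, 'unsafe_image': 0.0, 'bot_activity': 1.0}
```

Because every detector has the same signature, adding a new model means appending one function to `DETECTORS`; the aggregation code does not change.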

The Human-AI Collaboration

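Pure automation breaks down at scale because context matters: the same phrase can be harassment in one post and a quoted news headline in another. Your system uses a hybrid approach in which models decide clear-cut cases and humans review ambiguous ones. Reviewer decisions become labeled training data, creating a feedback loop that improves detection accuracy over time.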
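One common way to implement this split is confidence-based routing. A minimal sketch, assuming each post has already been reduced to a single violation score in [0, 1]; the thresholds 0.2 and 0.9 are hypothetical and would be tuned on labeled data in practice.

```python
# Assumed thresholds; tuning them trades reviewer workload against the
# number of wrong automated decisions.
AUTO_APPROVE_BELOW = 0.2
AUTO_REMOVE_AT = 0.9

# Reviewer verdicts collected as (post text, label) pairs for retraining.
training_examples: list = []


def route(violation_score: float) -> str:
    """Let the model decide confident cases; send the uncertain middle to humans."""
    if violation_score >= AUTO_REMOVE_AT:
        return "auto_remove"
    if violation_score < AUTO_APPROVE_BELOW:
        return "auto_approve"
    return "human_review"


def record_human_decision(text: str, label: str) -> None:
    """Close the feedback loop: human labels become future training data."""
    training_examples.append((text, label))


for score in (0.05, 0.5, 0.95):
    print(score, route(score))
# 0.05 auto_approve
# 0.5 human_review
# 0.95 auto_remove
```

Widening the band between the two thresholds sends more posts to humans and fewer wrong decisions to users; narrowing it does the opposite.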

Context in Distributed Systems

Integration with Your Twitter Architecture

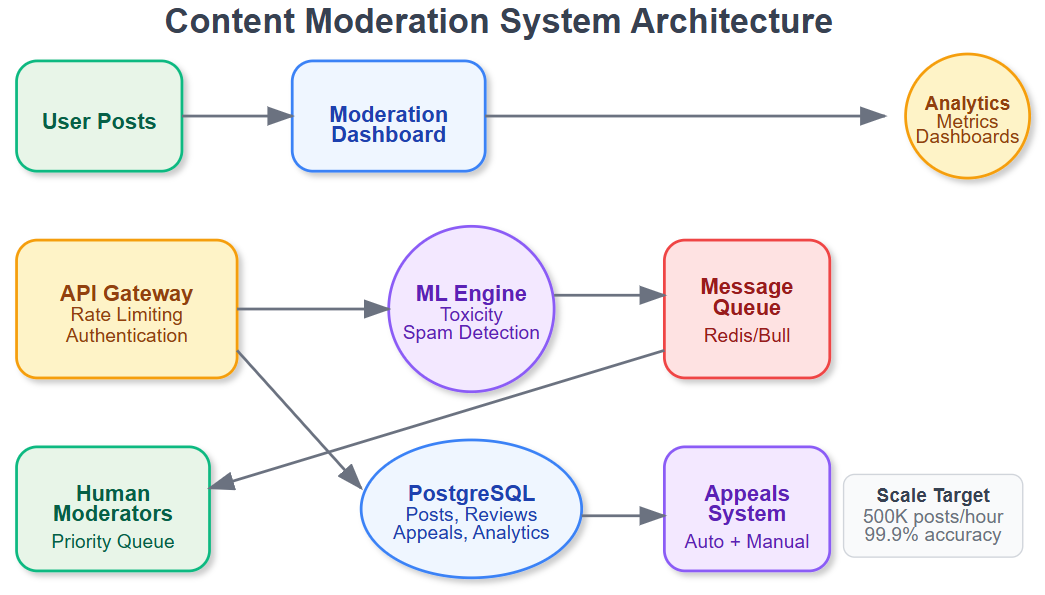

Your content moderation system sits between your stream processing pipeline (Lesson 27) and your search infrastructure (Lesson 29). It receives posts from Kafka streams, applies ML filtering, routes complex cases to human reviewers, and feeds clean content to your search indexes.

Data Flow Integration:

User Post → Stream Processing → Content Moderation → Search Indexing → User Feeds
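The data flow above can be simulated in-process to see how stages hand work to each other. In this sketch `asyncio.Queue` objects stand in for Kafka topics, the moderation rule is a hypothetical one-liner in place of the ML engine, and the search index is a plain list; none of these are the lesson's actual components.

```python
import asyncio
from typing import Callable, Optional

SENTINEL = None  # end-of-stream marker passed down the pipeline


async def run_stage(inbox: asyncio.Queue, outbox: asyncio.Queue,
                    transform: Callable[[str], Optional[str]]) -> None:
    """Consume posts, transform them, and forward results that survive."""
    while (post := await inbox.get()) is not SENTINEL:
        result = transform(post)
        if result is not None:  # None means this stage dropped the post
            await outbox.put(result)
    await outbox.put(SENTINEL)  # propagate shutdown downstream


def stream_processing(post: str) -> str:
    return post.strip()


def moderate(post: str) -> Optional[str]:
    # Hypothetical rule standing in for the ML detection engine.
    return None if "spam" in post.lower() else post


async def index_sink(inbox: asyncio.Queue, index: list) -> None:
    """Final stage: approved posts are added to the search index."""
    while (post := await inbox.get()) is not SENTINEL:
        index.append(post)


async def run_pipeline(posts: list) -> list:
    raw, processed, approved = asyncio.Queue(), asyncio.Queue(), asyncio.Queue()
    index: list = []
    workers = [
        asyncio.create_task(run_stage(raw, processed, stream_processing)),
        asyncio.create_task(run_stage(processed, approved, moderate)),
        asyncio.create_task(index_sink(approved, index)),
    ]
    for post in posts:
        await raw.put(post)
    await raw.put(SENTINEL)
    await asyncio.gather(*workers)
    return index


print(asyncio.run(run_pipeline(["  hello world ", "SPAM offer", "good post"])))
# ['hello world', 'good post']
```

The key property carried over from the real architecture is that moderation sits between processing and indexing, so a rejected post never reaches the search index or user feeds.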

Scalability Challenges

Processing 500K posts/hour (about 139 posts per second on average) means your system must:

Handle traffic spikes during viral events

Maintain sub-second response times for real-time filtering

Scale human review capacity dynamically

Process appeals without creating bottlenecks
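A back-of-the-envelope calculation shows what these requirements mean in numbers. Only the 500K posts/hour target comes from this lesson; the 5x peak factor, 200 ms model latency, 2% human-review rate, and 120 reviews per reviewer-hour are assumptions to replace with your own measurements. The in-flight figure uses Little's law: items in the system = arrival rate × time each item spends in it.

```python
import math

POSTS_PER_HOUR = 500_000          # target from this lesson
PEAK_MULTIPLIER = 5               # assumed spike factor during viral events
MODEL_LATENCY_S = 0.2             # assumed time per post in the ML stage
HUMAN_REVIEW_PERCENT = 2          # assumed share of posts routed to humans
REVIEWS_PER_REVIEWER_HOUR = 120   # assumed: about 30 seconds per review


def capacity_plan() -> dict:
    avg_rps = POSTS_PER_HOUR / 3600
    peak_rps = avg_rps * PEAK_MULTIPLIER
    # Little's law: items in flight = arrival rate x time in system.
    in_flight_at_peak = math.ceil(peak_rps * MODEL_LATENCY_S)
    human_items_per_hour = POSTS_PER_HOUR * HUMAN_REVIEW_PERCENT // 100
    reviewers_needed = math.ceil(human_items_per_hour / REVIEWS_PER_REVIEWER_HOUR)
    return {
        "avg_posts_per_s": round(avg_rps, 1),
        "peak_posts_per_s": round(peak_rps, 1),
        "in_flight_at_peak": in_flight_at_peak,
        "human_items_per_hour": human_items_per_hour,
        "reviewers_needed": reviewers_needed,
    }


for name, value in capacity_plan().items():
    print(f"{name}: {value}")
# avg_posts_per_s: 138.9
# peak_posts_per_s: 694.4
# in_flight_at_peak: 139
# human_items_per_hour: 10000
# reviewers_needed: 84
```

The reviewer count covers average load only. If viral spikes also multiply the review queue, you either staff for the peak or let the queue absorb the burst, which is why human review capacity has to scale dynamically.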

Architecture Deep Dive

Component Architecture

Content Ingestion Service - Receives posts from Kafka streams and initiates the moderation workflow

ML Detection Engine - Runs multiple specialized models in parallel for comprehensive content analysis

Human Review Queue - Manages the workflow for human moderators with priority routing and workload balancing

Appeal Processing System - Handles user appeals with automated and manual review stages

Decision Engine - Coordinates between automated and human decisions, maintaining audit trails

Notification Service - Informs users about moderation actions and appeal outcomes
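Two of these components, the Human Review Queue and the Decision Engine, can be sketched together: a heap-based queue that surfaces the most severe case first, and an engine that records every automated and human decision in an append-only audit log. The class names, decision strings, and severity scale are hypothetical; workload balancing across moderators and persistent storage are left out.

```python
import heapq
import itertools
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    post_id: int
    decision: str
    actor: str            # "model" or a reviewer id
    timestamp: datetime


class HumanReviewQueue:
    """Priority queue that hands out the most severe case first."""

    def __init__(self) -> None:
        self._heap: list = []
        self._counter = itertools.count()  # tie-breaker: FIFO within equal severity

    def push(self, post_id: int, severity: float) -> None:
        # heapq is a min-heap, so negate severity to pop the highest first.
        heapq.heappush(self._heap, (-severity, next(self._counter), post_id))

    def pop(self) -> int:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


class DecisionEngine:
    """Applies upstream routing results and keeps an audit trail."""

    def __init__(self, queue: HumanReviewQueue) -> None:
        self.queue = queue
        self.audit_log: list = []

    def _record(self, post_id: int, decision: str, actor: str) -> None:
        self.audit_log.append(
            AuditEntry(post_id, decision, actor, datetime.now(timezone.utc)))

    def handle_model_result(self, post_id: int, route: str, severity: float) -> None:
        """route is assumed to be 'approve', 'remove', or 'review'."""
        if route == "review":
            self.queue.push(post_id, severity)
            self._record(post_id, "queued_for_review", "model")
        else:
            self._record(post_id, route, "model")

    def review_next(self, reviewer_id: str, decision: str) -> int:
        """A moderator takes the most severe pending case and decides it."""
        post_id = self.queue.pop()
        self._record(post_id, decision, reviewer_id)
        return post_id


engine = DecisionEngine(HumanReviewQueue())
engine.handle_model_result(1, "review", severity=0.5)
engine.handle_model_result(2, "review", severity=0.8)
engine.handle_model_result(3, "remove", severity=0.95)
print(engine.review_next("reviewer-7", "remove"))  # 2
print(len(engine.audit_log))  # 4
```

Keeping the audit log append-only means an appeal reviewer can see every step a post went through, including which model or moderator made each call.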